Batch Insert vs. Single Insert in SQL

Beginning with SQL Server 2017 (14.x) CTP 1.1, BULK INSERT supports the CSV format, as does Azure SQL Database. BULK INSERT loads data from a data file into a table.

When you need to bulk insert many millions of records into a MySQL database, you soon realize that sending INSERT statements one by one is not a viable solution.
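
To make the contrast concrete, here is a minimal sketch that loads the same rows twice: once with one INSERT and one commit per row, and once with a single `executemany` call inside one transaction. It assumes a hypothetical `readings` table and uses SQLite from Python's standard library as a stand-in for MySQL, so absolute timings will differ from a networked server (an in-memory database also understates the per-commit cost).

```python
import sqlite3
import time

# Hypothetical table and data; SQLite (bundled with Python) stands in for MySQL.
def make_db():
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE readings (id INTEGER PRIMARY KEY, value REAL)")
    return conn

def insert_one_by_one(conn, rows):
    # One INSERT and one COMMIT per row: every record pays the full
    # per-statement and per-transaction cost.
    for row in rows:
        conn.execute("INSERT INTO readings (id, value) VALUES (?, ?)", row)
        conn.commit()

def insert_batched(conn, rows):
    # executemany compiles the statement once and binds each row in turn;
    # "with conn" wraps everything in a single transaction
    # (committed on success, rolled back on an exception).
    with conn:
        conn.executemany("INSERT INTO readings (id, value) VALUES (?, ?)", rows)

rows = [(i, i * 0.5) for i in range(10_000)]
single_db, batched_db = make_db(), make_db()
for name, load, conn in [("one by one", insert_one_by_one, single_db),
                         ("batched", insert_batched, batched_db)]:
    start = time.perf_counter()
    load(conn, rows)
    print(f"{name}: {time.perf_counter() - start:.3f}s")
```

Both databases end up with identical contents; only the number of statements and transactions sent differs.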

Note that the multiple-row INSERT syntax (a single INSERT ... VALUES statement listing several rows) is only supported in SQL Server 2008 or later. The MySQL documentation has some INSERT optimization tips that are worth reading to start with. Before SQL Server 2017 (14.x) CTP 1.1, comma-separated value (CSV) files were not supported by SQL Server bulk-import operations.
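
A multiple-row INSERT can be built with one placeholder group per row, so the database receives a single statement instead of many. The sketch below assumes a hypothetical `colors` table and runs against SQLite (which accepts the same `VALUES (...), (...)` form); the helper names are illustrative, not part of any library.

```python
import sqlite3

def multi_row_insert_sql(table, columns, n_rows):
    # One parenthesized row constructor per row, e.g. "(?, ?)" for two columns.
    row = "(" + ", ".join(["?"] * len(columns)) + ")"
    # Table and column names are interpolated, so they must be trusted
    # identifiers; only the values go through bound parameters.
    return (f"INSERT INTO {table} ({', '.join(columns)}) VALUES "
            + ", ".join([row] * n_rows))

def insert_multi_row(conn, table, columns, rows):
    sql = multi_row_insert_sql(table, columns, len(rows))
    params = [value for row in rows for value in row]  # flatten row tuples
    with conn:
        conn.execute(sql, params)

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE colors (id INTEGER, name TEXT)")
insert_multi_row(conn, "colors", ["id", "name"], [(1, "red"), (2, "green"), (3, "blue")])
print(multi_row_insert_sql("colors", ["id", "name"], 2))
# INSERT INTO colors (id, name) VALUES (?, ?), (?, ?)
```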

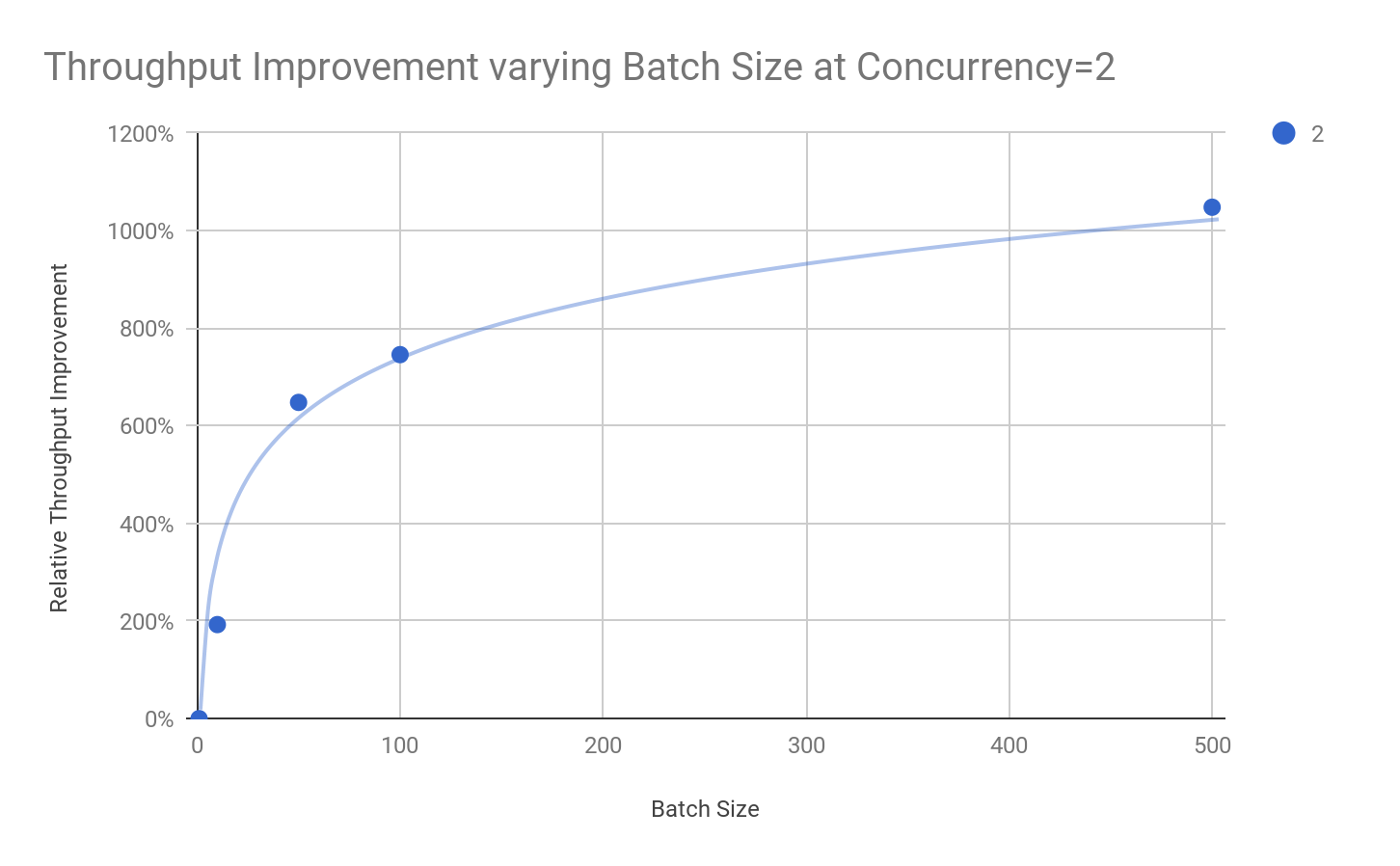

Optimize large SQL Server INSERT, UPDATE and DELETE processes by using batches (Eduardo Pivaral). Comparing multiple-row INSERT vs. single-row INSERT with three data load methods.
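
The batching idea for large DELETE (or UPDATE) processes can be sketched as a loop that removes a bounded number of rows per transaction and stops once a batch affects no rows. This assumes a hypothetical `events` table with an `archived` flag and uses SQLite in place of SQL Server; because SQLite's DELETE has no `TOP` clause by default, each batch first selects at most `batch_size` rowids.

```python
import sqlite3

def delete_in_batches(conn, batch_size=1000):
    # Hypothetical table "events" with an "archived" flag.
    batches = deleted = 0
    while True:
        with conn:  # each batch commits on its own, keeping transactions short
            cur = conn.execute(
                "DELETE FROM events WHERE rowid IN "
                "(SELECT rowid FROM events WHERE archived = 1 LIMIT ?)",
                (batch_size,),
            )
        if cur.rowcount == 0:  # nothing left to delete: stop the loop
            break
        batches += 1
        deleted += cur.rowcount
    return deleted, batches

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, archived INTEGER)")
with conn:
    conn.executemany("INSERT INTO events (archived) VALUES (?)",
                     [(1,)] * 2500 + [(0,)] * 500)
result = delete_in_batches(conn, batch_size=1000)
print(result)  # (2500, 3)
```

Short transactions keep locks and log growth bounded per batch, at the cost of more round trips.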

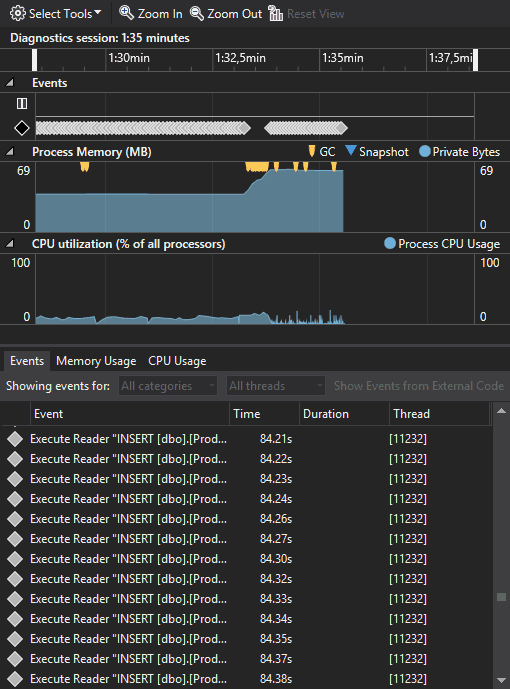

It is better and more secure to use the index/single DML approach. The cause of poor performance can sometimes be surprising. On bulk insert: in my last post I talked about how persisting data can become the bottleneck on large, high-scale systems nowadays.

Using the multiple-row VALUES form of the INSERT statement, you can insert at most 1,000 rows at a time. BULK INSERT works differently: its functionality is similar to that provided by the in option of the bcp command, except that the data file is read by the SQL Server process.

If you want to insert more rows than that, consider using multiple INSERT statements, BULK INSERT, or a derived table. Also keep in mind that 1,000 individual INSERTs behave very differently from one INSERT with 1,000 VALUES rows or from the various bulk-insert methods. I also noted that more and more people tend to think that databases are simply slow, seeing them as just big I/O systems.
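
One way to stay under a per-statement row limit is to split the data into chunks and send one multiple-row INSERT per chunk, all inside one transaction. In this sketch the 1,000-row cap mirrors the SQL Server limit described above; it runs against SQLite, which instead limits bound parameters (999 by default before SQLite 3.32.0), so the chunk size is also capped by `max_params // len(columns)`. The table `t` and the helper names are hypothetical.

```python
import sqlite3
from itertools import islice

SQLSERVER_MAX_VALUES_ROWS = 1000  # row limit for one INSERT ... VALUES list in SQL Server
SQLITE_SAFE_MAX_PARAMS = 999      # SQLite's default bound-parameter limit before 3.32.0

def chunked(rows, size):
    # Yield consecutive lists of at most `size` rows.
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk

def insert_in_chunks(conn, table, columns, rows,
                     max_rows=SQLSERVER_MAX_VALUES_ROWS,
                     max_params=SQLITE_SAFE_MAX_PARAMS):
    # Rows per statement are capped by the VALUES row limit and by the
    # number of bound parameters (rows * columns) the driver accepts.
    size = max(1, min(max_rows, max_params // len(columns)))
    row_sql = "(" + ", ".join(["?"] * len(columns)) + ")"
    statements = 0
    with conn:  # one transaction around all chunks
        for chunk in chunked(rows, size):
            sql = (f"INSERT INTO {table} ({', '.join(columns)}) VALUES "
                   + ", ".join([row_sql] * len(chunk)))
            conn.execute(sql, [v for row in chunk for v in row])
            statements += 1
    return statements

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE t (a INTEGER, b INTEGER)")
n = insert_in_chunks(conn, "t", ["a", "b"], [(i, i * i) for i in range(2500)])
print(n)  # 6 statements of up to 499 rows (2 columns x 499 = 998 parameters)
```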

However, in some cases a CSV file can be used as the data file for a bulk import of data into SQL Server. In a batched loop, if a batch does not operate on any rows, the process ends because the row count will be 0. The performance of extract, transform, load (ETL) processes for large quantities of data can always be improved by objective testing and experimentation with alternative techniques.
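
For comparison with server-side BULK INSERT, a client-side CSV load can be sketched with Python's `csv` module feeding `executemany` in fixed-size batches. This is a swapped-in technique, not BULK INSERT itself: it assumes SQLite as the target, a hypothetical `people` table, and a CSV whose first line holds trusted column names.

```python
import csv
import io
import sqlite3
from itertools import islice

def load_csv(conn, table, csv_file, batch_size=5000):
    # Assumes the first CSV line holds column names matching the table.
    reader = csv.reader(csv_file)
    header = next(reader)
    sql = (f"INSERT INTO {table} ({', '.join(header)}) "
           f"VALUES ({', '.join(['?'] * len(header))})")
    loaded = 0
    with conn:  # whole file in one transaction; rows sent batch_size at a time
        while batch := list(islice(reader, batch_size)):
            conn.executemany(sql, batch)
            loaded += len(batch)
    return loaded

data = io.StringIO("id,name\n1,alpha\n2,beta\n3,gamma\n")
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE people (id INTEGER, name TEXT)")
loaded = load_csv(conn, "people", data, batch_size=2)
print(loaded)  # 3
```

For a real file, open it with `open(path, newline="")` as the `csv` module documentation recommends. Every CSV field arrives as a string; here SQLite's INTEGER column affinity converts the `id` values.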

High-speed inserts with MySQL. Inserting 1,000 rows into one table can also behave very differently from inserting 1,000 rows into table a, 1,000 rows into table b, 1,000 rows into table c, and so on. For a description of the BULK INSERT syntax, see BULK INSERT (Transact-SQL).

Another approach for these cases is to use a temporary table to filter the … Loading data fast: regular INSERT vs. …
